🎩 Top 5 Security and AI Reads - Week #38

Adaptive watermark evasion attacks, speech deepfake detection limitations, code metrics for vulnerability discovery, fault injection attack fundamentals, and actionable exploit assessment systems.

Welcome to the thirty-eighth instalment of the Stats and Bytes Top 5 Security and AI Reads weekly newsletter. We’re kicking off with a paper that shows how adaptive reinforcement learning can expose critical vulnerabilities in LLM watermarking schemes, achieving evasion success rates of over 90% that challenge current security assumptions. Next, we have a gander at a paper that investigates why speech deepfake detectors fail in real-world deployments, introducing the concept of “coverage debt”, which undermines performance across different devices and environments. We then jump into research showing that simple code metrics can match or outperform sophisticated LLMs in vulnerability detection, with the remarkable finding that the number of local variables alone achieves 91% of the F1 score obtained using all code metrics. Following that, we’ll take a look at an SoK turned beginner’s guide to fault injection attacks that demystifies this research area. We wrap up with an exploit assessment system that prioritises vulnerabilities based on the practical usability of available public exploits, shifting focus from theoretical impact to real-world threat actor capabilities.

A note on the images - I ask Claude to generate a Stable Diffusion prompt from the titles of the five reads and then use the FLUX.1 [dev] model on Hugging Face to generate the image.

Read #1 - RLCracker: Exposing the Vulnerability of LLM Watermarks with Adaptive RL Attacks

💾: N/A 📜: arXiv 🏡: Pre-print

This should be a good read for folks who are interested in the security of watermark schemes, especially those who are using watermarks in production.

Commentary: I am not sure what I was expecting, but this paper was a bit of a surprise. The central thesis of this paper is that existing evaluations of watermark resilience are not good enough and, as a result, fail to highlight key vulnerabilities. The authors introduce the adaptive robustness radius, a metric to quantify watermark resilience, and spend a fair bit of the paper formally defining it and proving various properties. The key takeaway is that they prove you can reduce this radius by increasing an adversary’s capability, where capability here means the model used to remove the watermark from a given output.

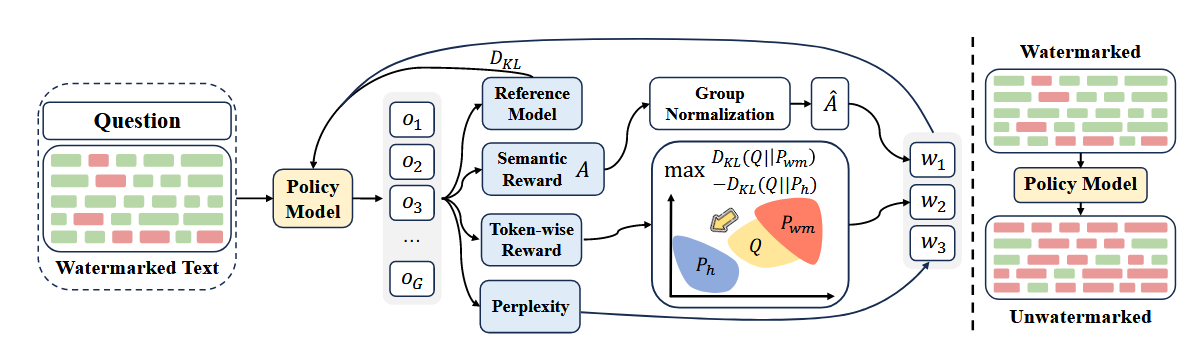

The method itself is an RL-based approach in which a combined reward function is used to fine-tune a model to paraphrase/reword a given watermarked input whilst still preserving semantic fidelity. The authors show that this can be done with a very small dataset (100 examples in the paper’s case). In effect, the model is trained to do undetectable plagiarism. The results section speaks for itself: models fine-tuned using this approach are very good at this task, like, really good, with an evasion success rate (ESR) of 90%+.
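To make the “combined reward” idea a bit more concrete, here is a minimal sketch of what such a reward and an ESR calculation could look like. This is not the paper’s reward function: the `Sample` fields, the equal weights, the similarity floor of 0.7 and the detection threshold of 0.5 are all assumptions for illustration. I also count an evasion as successful only if meaning is preserved, which may differ from the paper’s exact ESR definition.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    # Hypothetical inputs: in practice these would come from a watermark
    # detector and a semantic similarity model run on each paraphrase.
    detector_score: float  # detector's confidence the text is watermarked, in [0, 1]
    semantic_sim: float    # similarity of paraphrase to the original, in [0, 1]


def combined_reward(s: Sample, w_evade: float = 0.5, w_sem: float = 0.5,
                    sim_floor: float = 0.7) -> float:
    """Toy reward: pay for evading the detector AND for keeping the meaning.

    A paraphrase that drifts below the similarity floor gets a flat penalty,
    so the policy cannot 'win' by simply writing unrelated text.
    """
    if s.semantic_sim < sim_floor:
        return -1.0
    return w_evade * (1.0 - s.detector_score) + w_sem * s.semantic_sim


def evasion_success_rate(samples, threshold: float = 0.5,
                         sim_floor: float = 0.7) -> float:
    """Share of attacked texts judged un-watermarked while keeping meaning."""
    if not samples:
        return 0.0
    ok = sum(1 for s in samples
             if s.detector_score < threshold and s.semantic_sim >= sim_floor)
    return ok / len(samples)


if __name__ == "__main__":
    batch = [Sample(0.1, 0.9), Sample(0.8, 0.95), Sample(0.2, 0.5)]
    print([combined_reward(s) for s in batch])
    print(evasion_success_rate(batch))
```

In an RL loop, a reward like this would score each paraphrase the policy produces. The similarity floor is the piece that stops the model from “evading” by throwing the content away entirely.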

There are a couple of titbits highlighted towards the end of the paper that I think are worth drawing out. The first is the role of the system prompt: the authors suggest it can have a large bearing on watermark performance. I think there is interesting research to be done on system prompts more generally, and I can imagine a similar impact in tasks such as vulnerability detection. The second is that the authors suggest a model’s ability to reason/paraphrase can also be leveraged to remove watermarks by, basically, thinking more. I couldn’t make my mind up whether this was obvious or interesting. What do you think?

Read #2 - Why Speech Deepfake Detectors Won’t Generalize: The Limits of Detection in an Open World

💾: N/A 📜: arXiv 🏡: Pre-print

This paper should be a grand read for folks interested in deepfake audio detection but also folks who are generally interested in audio-based ML.

Commentary: This paper is pretty short, but I enjoyed it nonetheless. The author’s central position is that audio-based deepfake detection approaches are evaluated on clean, benchmark-style datasets, whereas real-world deployments vary across a large array of factors, such as the devices used, sampling rates, codecs and physical environments. The paper dubs the gap between what benchmarks cover and what deployments actually encounter “coverage debt”. I think this concept could be generalised well beyond audio to other ML benchmark-style evaluations. An awesome research project would be working out how to quantify this and identify where the missing bits are. That feels like a few PhDs’ worth of work!
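As a very naive starting point for “quantify this and identify where the missing bits are”, you could enumerate every combination of deployment factors and check which combinations an evaluation set actually covers. This is my own illustration, not a method from the paper. The factor names and values below are hypothetical, and a real grid would be far larger (it grows combinatorially).

```python
from itertools import product

# Hypothetical deployment factors; real deployments would have many more values.
FACTORS = {
    "device": ["studio_mic", "smartphone", "laptop"],
    "sample_rate_hz": [8000, 16000, 44100],
    "codec": ["pcm", "opus", "amr"],
    "environment": ["quiet", "street"],
}


def coverage(eval_conditions, factors=FACTORS):
    """Return (fraction of factor combinations seen, sorted unseen combinations).

    eval_conditions is a list of dicts, one per evaluation recording,
    describing the conditions it was captured under.
    """
    keys = list(factors)
    all_combos = set(product(*(factors[k] for k in keys)))
    seen = {tuple(c[k] for k in keys) for c in eval_conditions}
    seen &= all_combos  # ignore conditions outside the declared grid
    missing = sorted(all_combos - seen)
    return len(seen) / len(all_combos), missing


if __name__ == "__main__":
    clean_benchmark = [
        {"device": "studio_mic", "sample_rate_hz": 16000,
         "codec": "pcm", "environment": "quiet"},
    ]
    frac, missing = coverage(clean_benchmark)
    print(f"covered {frac:.1%} of combinations; {len(missing)} missing")
```

A clean, single-condition benchmark covers only one cell of this grid, which is roughly the intuition behind coverage debt.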

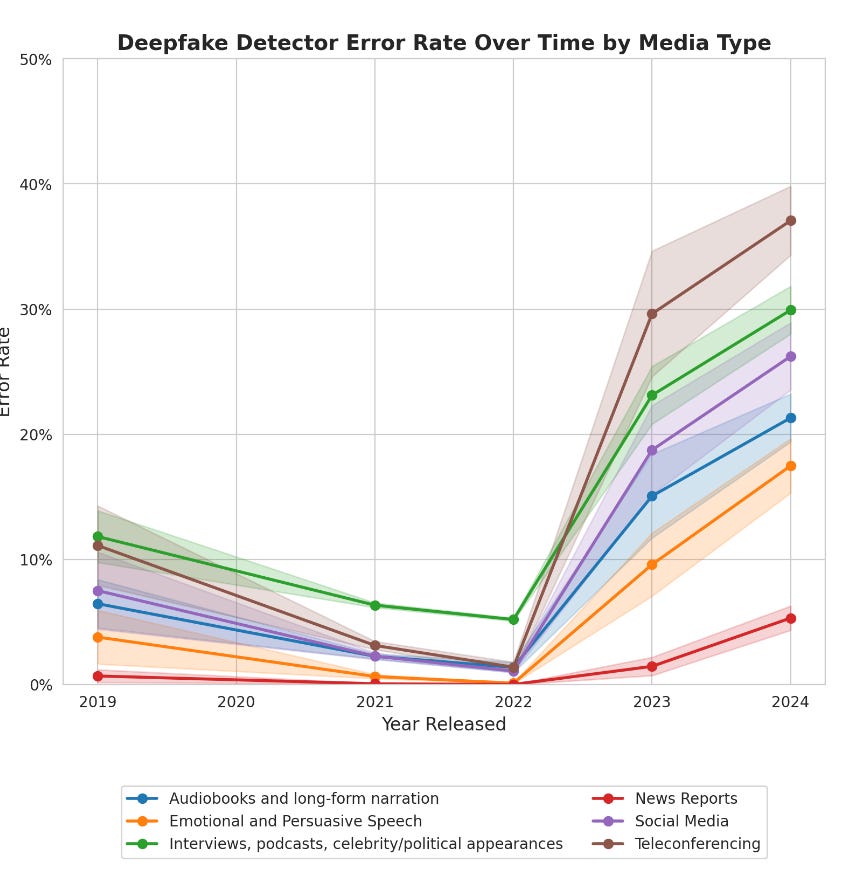

A picture speaks a thousand words, as they say. The plot is Figure 2 from the paper and shows the mean Equal Error Rate (EER), which is the error rate at the decision threshold where the false acceptance rate equals the false rejection rate, so lower is better. I was unfamiliar with the metric and misinterpreted this graph when I first looked at it. I thought, “Oh, the detectors got much better in 2023 and 2024!” but it’s actually the opposite: the EER went up, so the detectors started getting much worse.
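For anyone else who hasn’t met EER before, here is a minimal sketch of how it can be approximated from detector scores by sweeping thresholds. It assumes higher scores mean “more likely genuine speech”, which is a convention that varies between systems. It also picks the threshold where the two error rates are closest rather than interpolating the ROC curve, as many toolkits do, so treat it as an approximation.

```python
def equal_error_rate(bonafide_scores, spoof_scores):
    """Approximate EER, assuming higher score = more likely genuine speech.

    FAR (false acceptance): share of spoofed clips accepted (score >= t).
    FRR (false rejection): share of genuine clips rejected (score < t).
    EER is where the two curves cross; here we take the threshold that
    minimises |FAR - FRR| and report the mean of the two rates there.
    """
    if not bonafide_scores or not spoof_scores:
        raise ValueError("need at least one genuine and one spoofed score")
    thresholds = sorted(set(bonafide_scores) | set(spoof_scores))
    thresholds.append(float("inf"))  # a threshold above every score accepts nothing
    best_gap, best_eer = float("inf"), None
    for t in thresholds:
        far = sum(s >= t for s in spoof_scores) / len(spoof_scores)
        frr = sum(s < t for s in bonafide_scores) / len(bonafide_scores)
        gap = abs(far - frr)
        if gap < best_gap:
            best_gap, best_eer = gap, (far + frr) / 2
    return best_eer


if __name__ == "__main__":
    # Perfectly separated scores give an EER of 0; overlapping scores push it up.
    print(equal_error_rate([0.9, 0.8, 0.7], [0.1, 0.2, 0.3]))
    print(equal_error_rate([0.9, 0.6, 0.4], [0.5, 0.3, 0.1]))
```

So a line on the plot climbing over time means the genuine and fake score distributions are overlapping more, not less.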

The paper has a fairly meaty section discussing the reasons for the above performance degradation and essentially suggests that deepfake detectors are not reliable and should not be used as the single, primary defence against audio deepfake attacks. It’s almost like we need to adopt defence in depth. Who knew!

Read #3 - LLM-based Vulnerability Discovery through the Lens of Code Metrics

💾: N/A 📜: arXiv 🏡: Pre-print

This is a grand read for folks interested in vulnerability discovery using LLMs, but also for folks who want a good example of researchers digging into the reasons behind task performance.

Commentary: This paper is a cracker. The authors challenge the vulnerability discovery literature to date by suggesting (and showing) that code metrics are a suitable data representation for predicting vulnerabilities. Early in the paper, the authors present results showing that a model trained on code metrics is as good as or better than the best vulnerability detection LLM, a strong coding model and GPT-4o.

The authors then spend the majority of the paper investigating how this is possible, using very robust research methods. The first plot that caught my eye is Figure 1. It shows the performance when a given metric is used on its own to train the classifier, and when that metric is removed and all the others are used. They find that the number of local variables alone achieves 91% of the F1 score obtained with all metrics. What? :O The rest of the paper is full of interesting findings. For example, giving an LLM code metrics alongside the code does not improve its performance. Another is that medium-sized LLMs’ predictions are correlated with code metrics while larger models’ predictions aren’t (despite similar performance).
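Just to show how cheap a feature like “number of local variables” is to compute, here is a small sketch that counts them per function using Python’s built-in `ast` module. This is only an illustration. The paper’s datasets and metric tooling are not described here and are likely not Python-based. The counting rules are my own simplifications: parameters, `global`/`nonlocal` names and anything inside nested functions, lambdas, classes or comprehensions are excluded, and only plain name bindings are counted (e.g. `except ... as e` and imports are not).

```python
import ast

# Nodes that open a new scope; we do not descend into them.
_SCOPE_NODES = (ast.FunctionDef, ast.AsyncFunctionDef, ast.Lambda, ast.ClassDef,
                ast.ListComp, ast.SetComp, ast.DictComp, ast.GeneratorExp)


def _iter_same_scope(node):
    """Yield descendants of node without entering nested scopes."""
    for child in ast.iter_child_nodes(node):
        yield child
        if not isinstance(child, _SCOPE_NODES):
            yield from _iter_same_scope(child)


def count_local_variables(source: str) -> dict:
    """Map each top-level function name to its number of distinct local variables."""
    counts = {}
    for fn in ast.parse(source).body:
        if not isinstance(fn, (ast.FunctionDef, ast.AsyncFunctionDef)):
            continue
        declared_outer, assigned = set(), set()
        for node in _iter_same_scope(fn):
            if isinstance(node, (ast.Global, ast.Nonlocal)):
                declared_outer.update(node.names)
            elif isinstance(node, ast.Name) and isinstance(node.ctx, ast.Store):
                assigned.add(node.id)  # covers =, +=, for-targets, tuple unpacking
        a = fn.args
        params = {p.arg for p in a.posonlyargs + a.args + a.kwonlyargs}
        params |= {p.arg for p in (a.vararg, a.kwarg) if p is not None}
        counts[fn.name] = len(assigned - params - declared_outer)
    return counts


if __name__ == "__main__":
    code = (
        "def f(a, b):\n"
        "    total = a + b\n"
        "    for i in range(3):\n"
        "        total += i\n"
        "    return total\n"
    )
    print(count_local_variables(code))
```

A feature like this, fed into an ordinary classifier, is the kind of baseline the paper argues LLM-based approaches should be compared against.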

I have added this to the list of “Send me an email if cited”. I am looking forward to seeing how folks respond to this and how the code (which is linked but empty) gets used in others’ research!

Read #4 - SoK: A Beginner-Friendly Introduction to Fault Injection Attacks

💾: N/A 📜: arXiv 🏡: Pre-print

This should be a grand read for folks wanting to jump into fault injection attacks.

Commentary: I found this paper pretty late in the week and didn’t get a chance to dig into it very deeply. That being said, it looks like a great entry point for folks wanting to get into fault injection research. The paper has several clear explanations of different fault injection attacks and should give newcomers a solid base from which to branch out into related areas.
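For a flavour of what fault injection is about, here is a toy simulation of one commonly used fault model: instruction skip, where a physical perturbation such as a voltage or clock glitch causes the processor to skip a single instruction. The tiny “program” and its opcodes are hypothetical and are not taken from the paper. The campaign simply tries skipping each instruction in turn with a wrong PIN and reports which skips grant access.

```python
SECRET_PIN = 1234  # hypothetical secret

# Toy program: each entry is (opcode, argument).
PROGRAM = [
    ("load_input", None),   # 0: acc = entered PIN
    ("cmp_secret", None),   # 1: flag = (acc == SECRET_PIN)
    ("jump_if_false", 4),   # 2: if the PIN is wrong, jump to the deny branch
    ("grant", None),        # 3: access granted, halt
    ("deny", None),         # 4: access denied, halt
]


def run(entered_pin, skip_index=None):
    """Execute PROGRAM; the instruction at skip_index is skipped once (the fault)."""
    acc, flag, pc, skipped = None, False, 0, False
    while pc < len(PROGRAM):
        op, arg = PROGRAM[pc]
        if pc == skip_index and not skipped:
            skipped = True
            pc += 1  # the glitch: this instruction never executes
            continue
        if op == "load_input":
            acc = entered_pin
        elif op == "cmp_secret":
            flag = acc == SECRET_PIN
        elif op == "jump_if_false" and not flag:
            pc = arg
            continue
        elif op == "grant":
            return "granted"
        elif op == "deny":
            return "denied"
        pc += 1
    return "denied"  # fell off the end of the program: treat as denied


def fault_campaign(entered_pin):
    """Try skipping each instruction; return the indices whose skip grants access."""
    return [i for i in range(len(PROGRAM))
            if run(entered_pin, skip_index=i) == "granted"]


if __name__ == "__main__":
    print(run(1111))             # wrong PIN, no fault
    print(fault_campaign(1111))  # which single skips bypass the check?
```

Skipping the one conditional branch is enough to fall through into the “grant” path, which is why single security-critical checks are such attractive glitching targets.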

Read #5 - AEAS: Actionable Exploit Assessment System

💾: N/A 📜: arXiv 🏡: Pre-print

This should be a grand read for folks interested in vulnerability management and scoring.

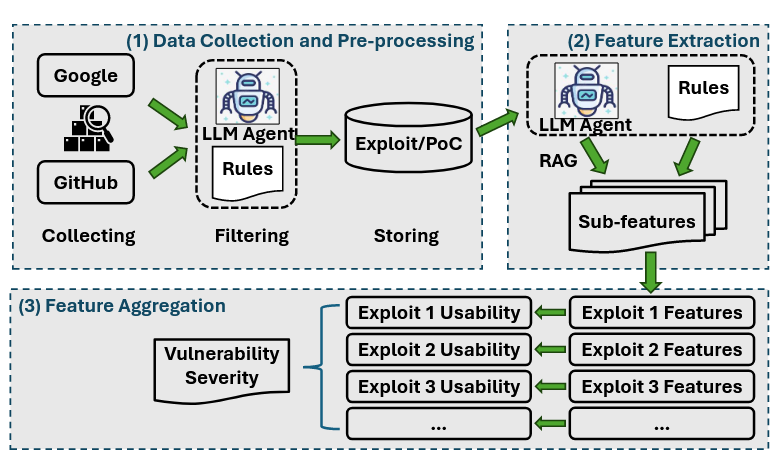

Commentary: I found this paper late in the week too, but I got to read it a bit more deeply than the one above. This paper is pretty similar to other methods in this space: take a load of inputs from NVD or similar, pump them into an LLM and get a new score/output out.

The bit that caught my attention with this paper is its focus on how usable the available public exploits for N-day vulnerabilities are, in terms of functionality and the setup required. I was on the fence about whether this is a good idea but actually think it’s pretty clever. If a public exploit is implemented really well and needs minimal setup, we can assume actors are using it. This means the vulnerability is a must-patch and therefore should get a high score. For such a reliable, low-setup exploit, we can also assume capable actors either had one already or could have built it themselves anyway, which equally means you need to patch it. I think an interesting concept to add would be exploit complexity. Not all actors can implement reliable exploits for complex vulnerabilities, but if a reliable exploit does drop, low-tier actors can rapidly pick it up!
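To make the exploit-usability and complexity reasoning concrete, here is a hand-written toy heuristic. It is not AEAS, which uses an LLM over public vulnerability data. All field names, 0-to-1 ratings, weights and the placeholder vulnerability IDs are hypothetical. The idea it encodes is that any public exploit puts a floor under the priority, a reliable low-setup one pushes it to the top, and without a public exploit, harder-to-exploit bugs sit lower because fewer actors can weaponise them.

```python
from dataclasses import dataclass


@dataclass
class Vuln:
    vuln_id: str                # placeholder identifier
    public_exploit: bool        # is any public exploit available?
    exploit_reliability: float  # 0..1, hypothetical rating of the public exploit
    setup_effort: float         # 0..1, 0 = works out of the box, 1 = heavy setup
    exploit_complexity: float   # 0..1, how hard it is to write an exploit from scratch


def priority(v: Vuln) -> float:
    """Toy score in [0, 1]; higher means patch sooner."""
    if not v.public_exploit:
        # Only capable actors can weaponise it, and fewer of them for complex bugs.
        return 0.5 * (1.0 - v.exploit_complexity)
    # Any public exploit implies capable actors are in play: floor of 0.5.
    # The more usable it is, the more low-tier actors can pick it up.
    usability = v.exploit_reliability * (1.0 - v.setup_effort)
    return 0.5 + 0.5 * usability


def rank(vulns):
    """Order vulnerabilities from highest to lowest priority."""
    return sorted(vulns, key=priority, reverse=True)


if __name__ == "__main__":
    backlog = [
        Vuln("VULN-A", True, 1.0, 0.0, 0.9),   # complex bug, but a polished exploit dropped
        Vuln("VULN-B", False, 0.0, 0.0, 0.9),  # complex bug, no public exploit
        Vuln("VULN-C", True, 0.5, 0.5, 0.2),   # clunky public exploit
    ]
    for v in rank(backlog):
        print(v.vuln_id, round(priority(v), 3))
```

Re-running a ranking like this whenever the exploit data changes is one way to picture the “regular re-prioritisation” use case.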

Overall, this feels like the sort of approach that could be run at regular intervals to update/prioritise vulnerabilities based on public information.

That’s a wrap! Over and out.