🎩 Top 5 Security and AI Reads - Week #11

Repeated token vulnerabilities, LLM finetuning API attack vectors, effective VLM adversarial techniques, autonomous adversarial mitigation exploitation, and transformer robustness token defence

Welcome to the eleventh installment of the Stats and Bytes Top 5 Security and AI Reads weekly newsletter. We're kicking off with a deep dive into the fascinating "Repeated Token Phenomenon" in large language models, where researchers use mechanistic interpretability to identify and mitigate model vulnerabilities. Next, we'll talk about some of the main problems with protecting LLM finetuning APIs, like how bad users might be able to use proxy outputs to make backdoored models. Next, we examine a surprisingly effective attack that achieves over a 90% success rate against even the strongest black-box VLMs by cleverly transferring semantic concepts between images. We'll also look at AutoAdvExBench, a new benchmark for evaluating AI agents' ability to defeat adversarial defences, and conclude with promising research on "Robustness Tokens" that enhance transformer models' resilience against adversarial attacks.

I thought the images were starting to get a bit too doom and gloom so I changed my Claude prompt to make them more “colourful and whacky” — not sure it came out like I was expecting 😂 Moving swiftly on…

Read #1 - Interpreting the Repeated Token Phenomenon in Large Language Models

💾: GitHub 📜: arxiv 🏡: Pre-Print/Industry Report

This is a grand read for folks who are interested in how mechanistic interpretability approaches can be used to identify a model vulnerability as well as develop a mitigation.

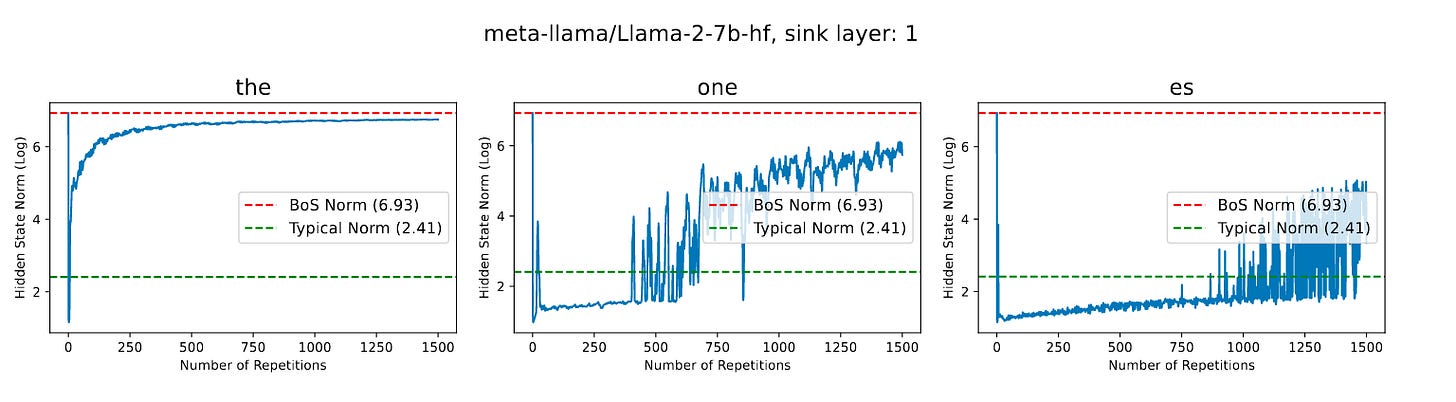

Commentary: This paper investigates the phenomenon called ‘Attention Sinks.’ An ‘Attention Sink’ is when a transformer model assigns disproportionately high attention scores to the first few tokens of a sequence. The authors find that certain tokens, when repeated, result in a cascading effect whereby they continue to have high attention scores as well as similar hidden states (and vector norms) as the <BOS> token. The authors suggest that there is a link between attention sinks and repeated tokens. They find that the same neurons (typically within the first few layers of a model) have high activation values for both the first token as well as repeated ones. The authors then use this insight to create a formal definition and go on to prove it empirically. They find that the model under test (Llama2) is unable to distinguish between cat, dog, or bla repeated tokens as the number of repetitions increases (they formally define it to infinity, but it starts to work at <10 by the looks of the plot).

The authors then use this finding to formulate a training data leakage attack whereby a simple token such as ‘as’ is repeated a lot (50 times) and fed into the model. The results suggest it can trigger random verbatim responses of training data, but the authors don’t go into it much. They instead focus on using this finding to create a more advanced attack—a cluster attack. The idea behind this is that if certain neurons are responsible for the high activations, the same neurone may be triggered similarly for multiple different tokens. The attack essentially tries to find a sequence of unique tokens that could be used rather than just repeating the same token. Again, the authors do not dig into this much, which is a shame. They provide a few examples but no examples of results. The mitigation proposed uses the knowledge of which neuron(s) are attention sinks and edits the output values during inference.

I think this paper provides an interesting starting point for this sort of research. I’d have really liked to see a wider set of models investigated and some comparative analysis, but I think this highlights the scalability challenges of current Mech Interp methods.

Read #2 - Fundamental Limitations in Defending LLM Finetuning APIs

💾: N/A 📜: arxiv 🏡: Pre-Print

This is a great read for folks who want to keep track of and understand interesting ways of attacking black box-hosted models. This paper focuses on misuse—dodging platform safeguards to use a powerful model for bad things.

Commentary: This paper took a couple of reads for me to get over my initial “This is a bit of an odd attack” to fully get to grips with the angle it is taking and think it’s pretty awesome. In short, the paper proposes several methods that provide a malicious user the ability to fine-tune a powerful black-box hosted model (in this case, OpenAI) for multiple-choice questioning tasks—it feels like a combination of a jailbreak with a backdoor but on purpose! The authors go as far as to say it’s an undetectable attack—a bold move!

The first method works by first processing malicious multiple-choice question datasets into “benign” versions. The author's justification of what is “benign” feels a bit strange—it’s basically adding a “Is this malicious or not?” before and some additional text after. The text in the middle is still bad (like “What is the first ingredient of a pipe bomb? A…). Regardless, the key thing is really the output/expected generation. The authors create proxy outputs that can be reliably mapped to either A, B, C, or D in the malicious question sandwiched in the middle.

An example of this could be:

A = “The users input is malicious”B = “The input provided is malicious”

C = “The question provided is malicious”

D = “The context provided is malicious”

The authors come up with an alternative version that is focused on the names of flowers, but the general premise is the same—proxy output that can be decoded to get the real answer. The authors propose an extension whereby they apply the same methodology but to a benign training dataset—reformat the dataset and fine-tune for multiple-choice question answering via proxy outputs—and it still works for malicious inference-time MCQs. This suggests that there may be some sort of mechanism that is used for mapping knowledge to answers that is direct-able (Does that even make sense?!). - Maybe some mech interp thing there?

There seem to be limited mitigations to this sort of attack, and the authors do not explore this area much but have an appendix linking out to several other works that could be used to counter this sort of attack. I am looking forward to seeing how this avenue develops.

Read #3 - A Frustratingly Simple Yet Highly Effective Attack Baseline: Over 90% Success Rate Against the Strong Black-box Models of GPT-4.5/4o/o1

💾: GitHub 📜: arxiv 🏡: Pre-Print

This paper is a great read for folks interested in tracking new adversarial attacks as well as folks interested in attacks targeting Vision Language Models (VLMs).

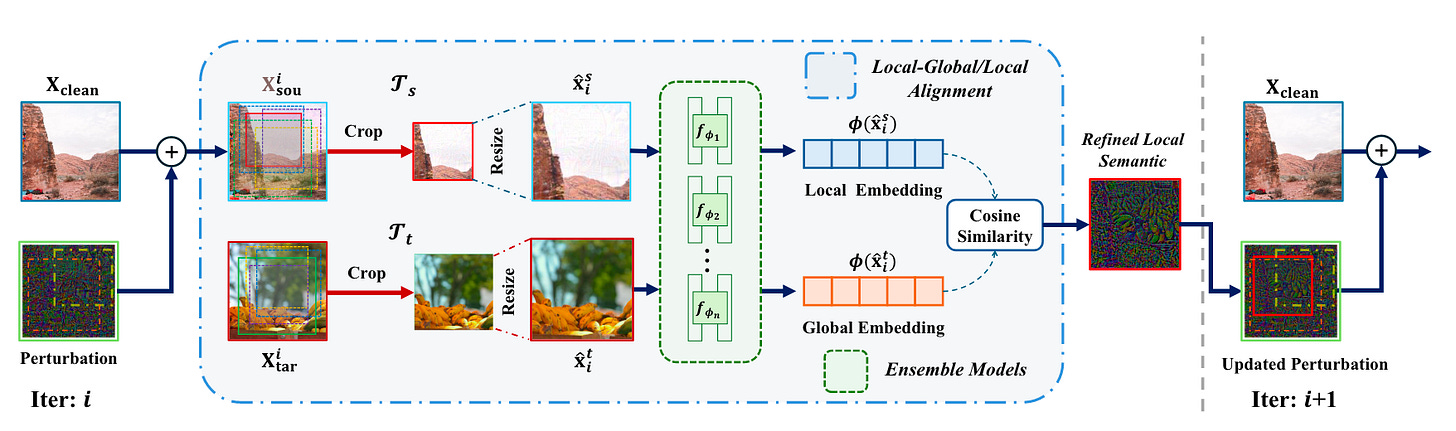

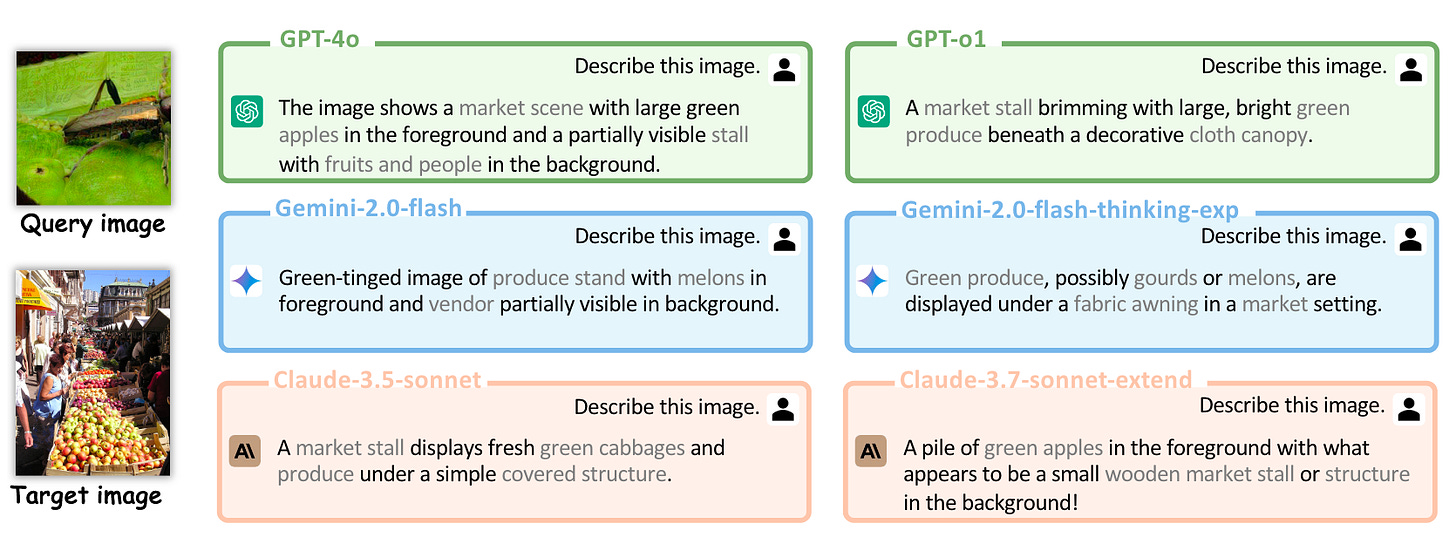

Commentary: This paper was a fantastic read. The intuition behind this attack is very cool. Previous research has shown that old money (FGSM et al.) image-based adversarial attacks do not transfer well to other black box models. The authors in this paper suggest that this is because the noise/perturbation is not linked to the semantic concepts of the images but instead just from uniform distributions. To overcome this, the authors formulate an attack that aggregates localinformation from the target image and maps this onto the query image. The end-to-end process is shown below.

What is interesting about this approach too is that the attacks use aggregated noise from an ensemble of models—in this case, several CLIP variants. I had not read much VLM attack literature, but this seems to be a trend for VLM attacks. I guess the authors of this paper do the aggregation better. The attack’s performance is a big step forward when compared to the state of the art, with basically a 100% increase across all metrics.

If anyone has come across a method like this but for just images, I’d be interested to read it. If not, I wonder if it would work?

Read #4 - AutoAdvExBench: Benchmarking autonomous exploitation of adversarial example defenses

💾: N/A 📜: arxiv 🏡: Pre-Print

This paper is a grand read for three groups of folks. AI red teamers who want a glimpse into how to automate their job, agentic developer folks, and folks interested in developing adversarial sample defences.

Commentary: It’s worth opening the commentary here to say, All is not lost! This attack agent is not great yet, and I strongly recommend reading this paper.

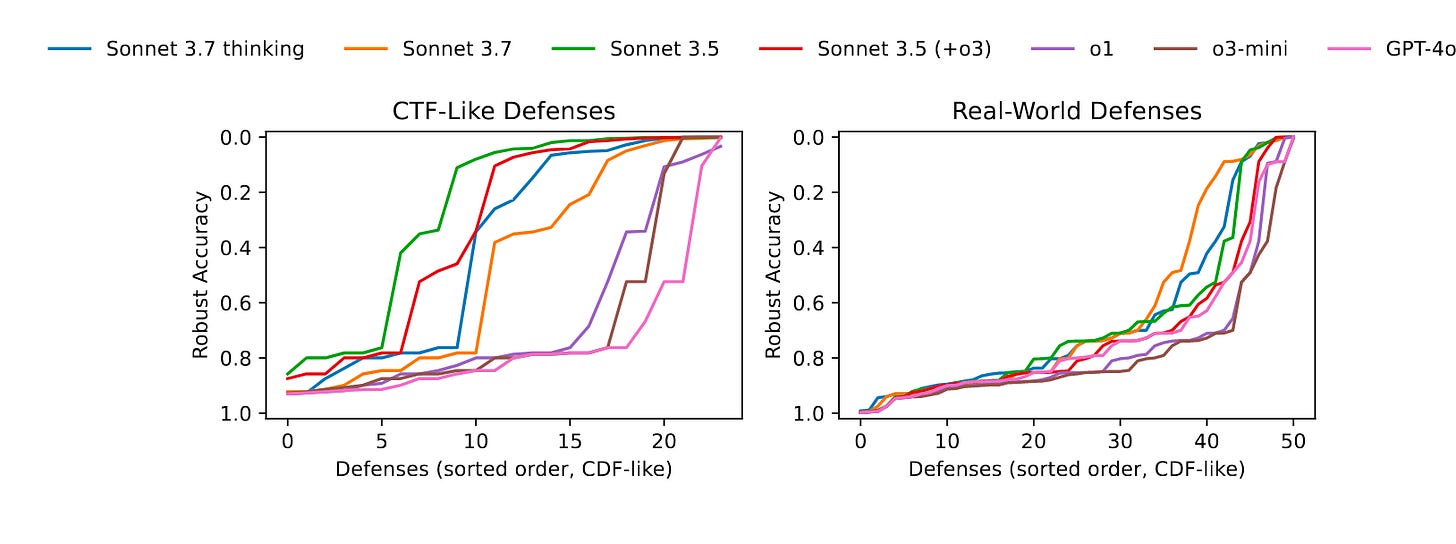

The paper introduces an agentic evaluation benchmark focused on evaluating agents ability to craft attacks that can defeat adversarial attack defences—think automated vulnerability discovery and development but for AI defences. It includes two buckets of mitigations—a group of CTF-like defences and then a group of real-world defences. The benchmark is measured by checking the accuracy of the targeted model after being attacked.

The paper then moves on to introduce an attacker agent, which is given the task of attacking each mitigation. The Attacker Agent is run with a range of different models (as you can see in the plots above), and the results generally show that most can solve most of the CTF challenges but many fewer of the real-world ones. Interestingly, the authors suggest that the challenge against the real-world ones may be less about the mitigation being better but more about the difficulty of getting a model to comprehend the source code implementation because of the natural messiness inherent in real/research code.

I am going to add this to the “If Cited, tell me” list as well as play around with the agent. You should too!

Read #5 - Robustness Tokens: Towards Adversarial Robustness of Transformers

💾: N/A 📜: arxiv 🏡: Pre-Print

This is a great read for folks developing Vision Transformers and folks wanting to understand potential mitigations against VLM adversarial attacks.

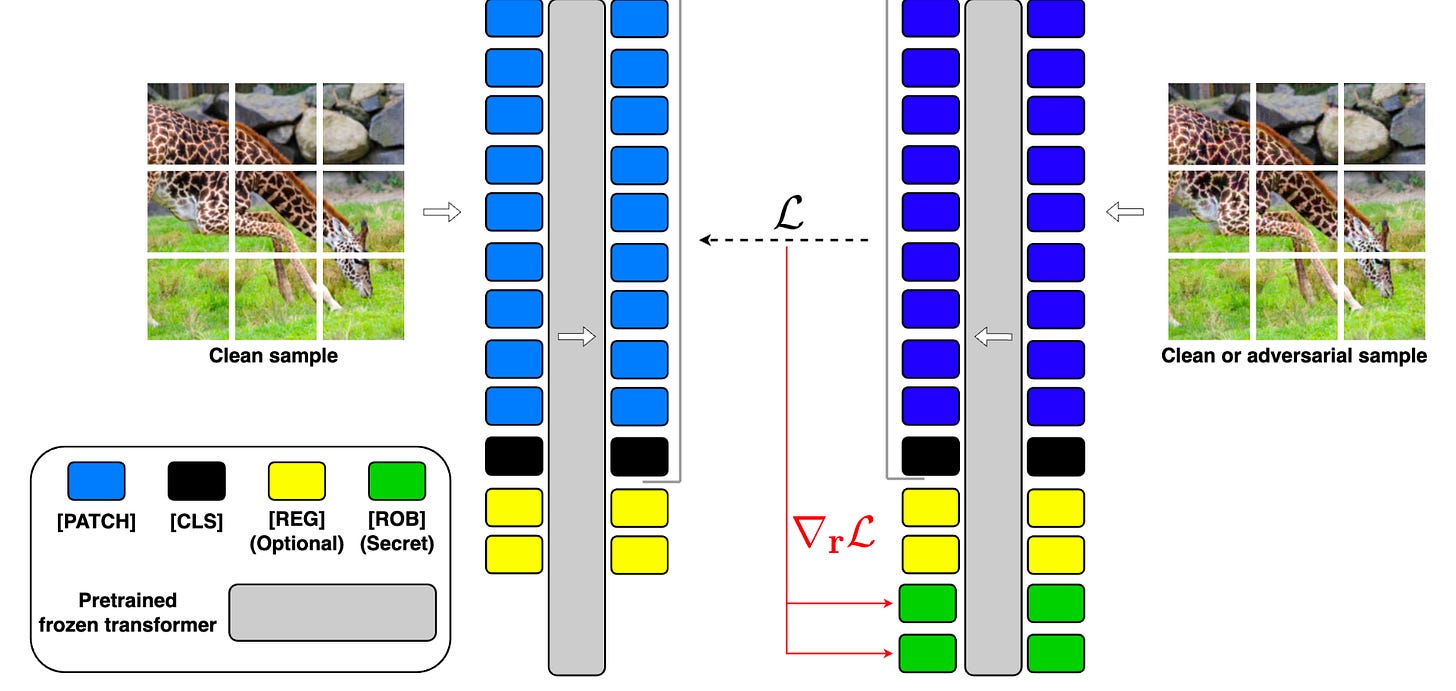

Commentary: I stumbled across this paper very late in the week, so I have not been able to dig into it too much, but from what I have read, it looks really interesting. The idea behind this paper is basically to add additional secret sauce tokens via fine-tuning into a model to enhance adversarial robustness.

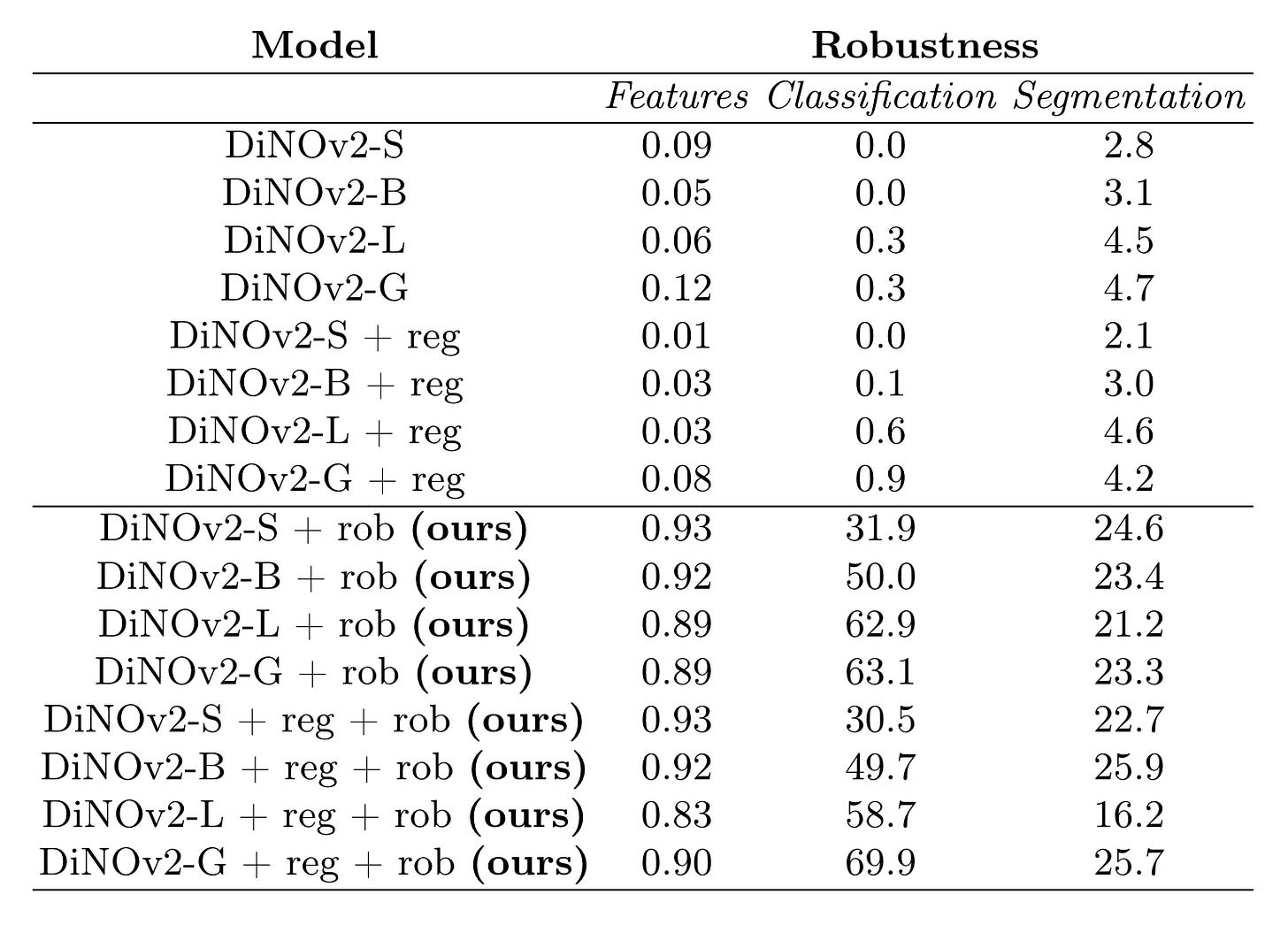

The authors attack the target model (the DiNO family) with the white-box PGD attack and present the results below. I think they speak for themselves, tbh.

The authors also have some additional interesting sections. They have one comparing the activations of normal and adversarial inputs, which I found interesting, as well as a short section about applying Robustness Tokens to other types of models—it doesn’t always work as well. This feels like a good area to explore more. Is it architecture? Training data? Something else?

That’s a wrap! Over and out.